Does This Explanation Help? How Lay Users Visually Attend, Trust, and Understand Local Explanations of Model-agnostic XAI Methods

- Date: 15.10.2021

Artificial Intelligence (AI) is playing an increasingly important role in high-stakes domains. Nevertheless, the effectiveness of AI systems in these domains is limited by their inability to explain their decisions to human users. Recently, researchers and practitioners seeking to increase the transparency of AI have shown a surge of interest in Explainable Artificial Intelligence (XAI). In particular, extensive XAI research has focused on developing model-agnostic methods that can explain local instances of any predictive model; because these methods are decoupled from the predictive model, they offer greater applicability and scalability. However, the user perspective has so far played a minor role in XAI research, resulting in a lack of understanding of how users visually attend to and perceive model-agnostic local explanations. To address this research gap, we harmonized explanation representations from established model-agnostic methods in an iterative design process and evaluated them with lay users in two online experiments and in a lab experiment using eye-tracking technology and interviews. The online experiments' results show that the evaluated harmonized explanation representations were equally effective with respect to understandability and trust. Nevertheless, the lab experiment's results indicate that lay users do not necessarily prefer simple explanation representations and that their preferences are contingent on their knowledge about AI. Our results suggest that there is no ideal explanation type and that selecting an appropriate explanation is context-dependent.

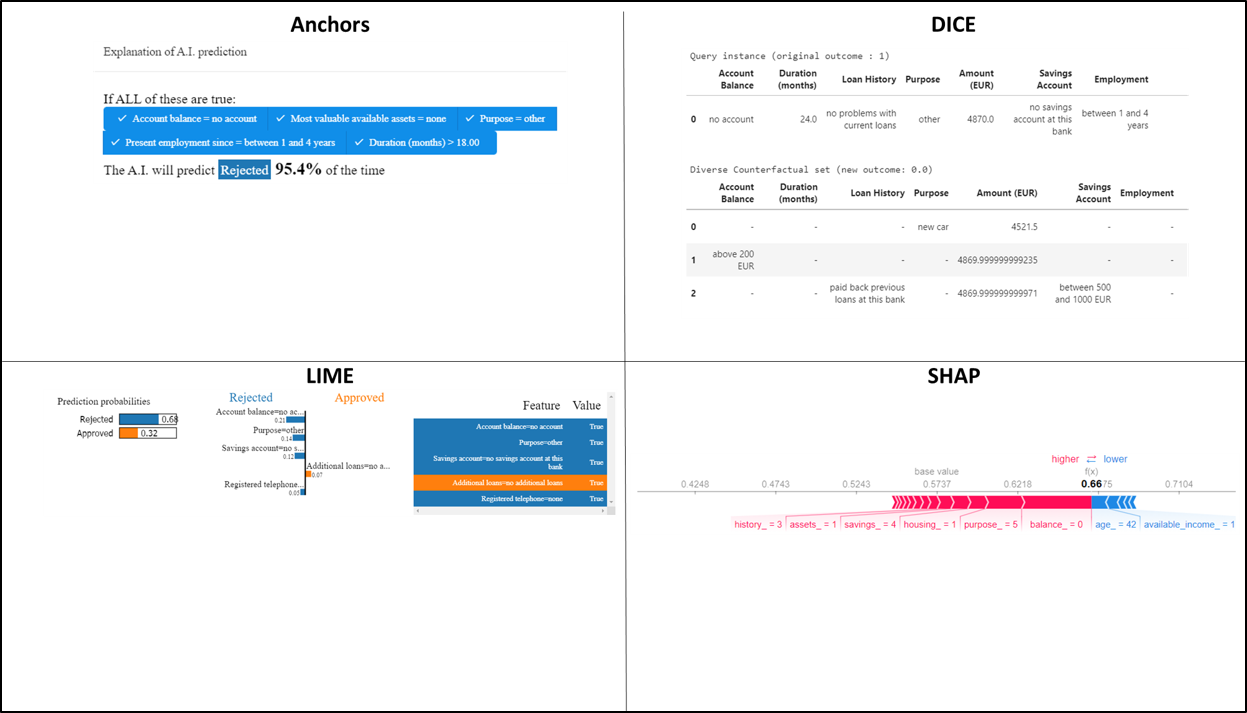

Original design of representations of model-agnostic explanations for Anchors, DICE, LIME, and SHAP

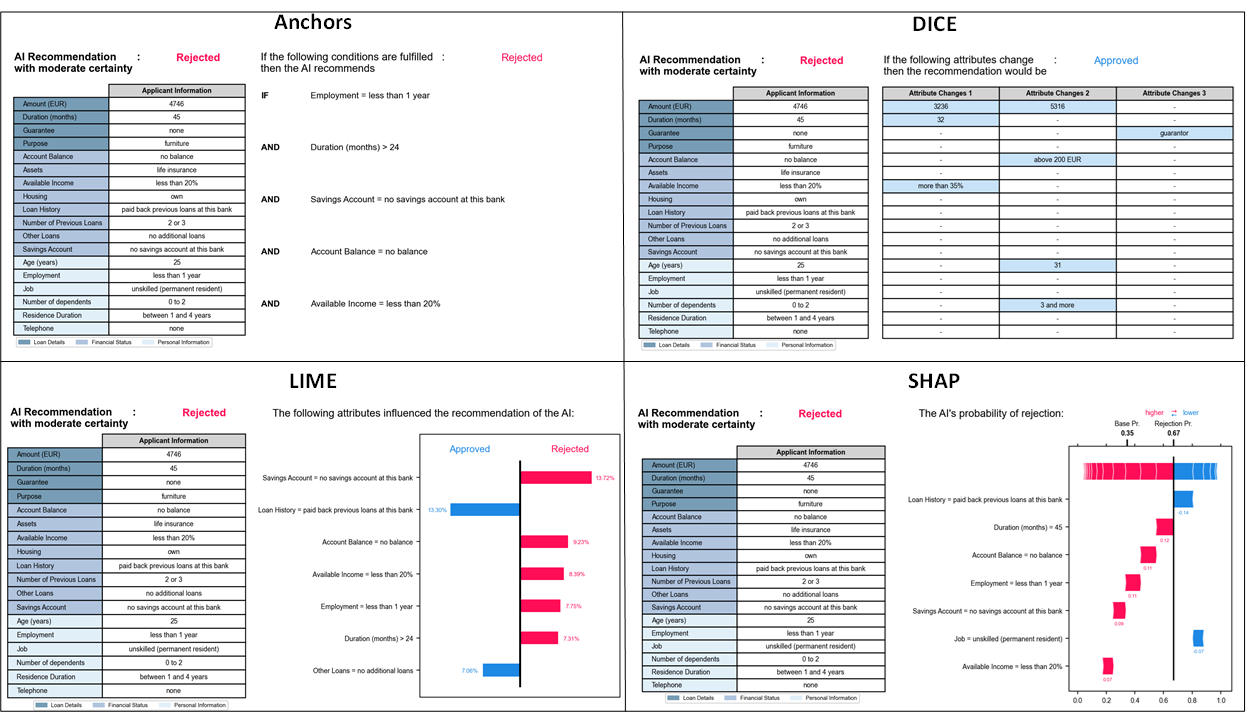

Design of harmonized representations of model-agnostic explanations for Anchors, DICE, LIME, and SHAP